
AI in BFSI: Revolutionizing risk management

By NETSOL Technologies, on October 7, 2025

Explore how AI is transforming risk management in the GCC’s BFSI sector. Learn the key challenges, the governance needs, and how NETSOL empowers compliant AI adoption.

Artificial intelligence in the BFSI (banking, financial services, and insurance) market is not just another wave of technology. It’s a boardroom-level agenda that redefines how financial institutions anticipate, measure, and act on risk.

In recent years, the Gulf Cooperation Council (GCC) countries have emerged as hotbeds of innovation in financial services. Fueled by abundant capital, supportive governments, and digital transformation agendas under national visions (e.g. Saudi Vision 2030, UAE Centennial 2071), banks and insurers across the GCC are increasingly turning to artificial intelligence (AI) to strengthen risk management. From predicting credit defaults to flagging fraud in real time, AI is no longer a futuristic concept for the GCC’s BFSI sector. It is rapidly becoming a core strategic tool. As the region grows more interconnected, its risks also escalate. That makes robust, intelligent risk frameworks not just desirable but essential for competitiveness and stability.

To better understand how AI usage varies across financial services, a study by Cambridge Judge Business School segments AI adopters by application domain.

Risk management is currently the leading area of AI implementation, followed by revenue generation through new products and processes. However, current implementation statistics and stated plans suggest that within two years AI will be most widely used for revenue generation.

As for the size of the opportunity, the broader GCC risk management market across industries, including BFSI, was valued at USD 257 million in 2024. It is forecast to reach USD 874 million by 2033, a compound annual growth rate (CAGR) of about 14.2%.
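
The growth rate follows the standard compound-annual-growth formula: (end value / start value)^(1 / years) - 1. The Python sketch below is a rough check. It assumes that USD 257 million is the 2024 base and that the forecast compounds annually over the nine years to 2033. On those assumptions, the rounded endpoints imply about 14.6% a year, slightly above the quoted 14.2%. The gap most likely comes from the base period or rounding used in the original forecast, which this sketch cannot confirm.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Market values in USD million; 2024 -> 2033 spans nine annual compounding periods (assumption).
rate = cagr(257, 874, 2033 - 2024)
print(f"Implied CAGR: {rate:.1%}")  # Implied CAGR: 14.6%
```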

For risk leaders, CTOs, and COOs, the central question isn’t whether AI matters, but how quickly it will decide who leads and who lags.

AI in BFSI is no longer limited to efficiency gains or fraud detection. It is about market positioning, regulatory trust, and leveraging risk management as a competitive advantage.

Executives who fail to recognize this shift risk eroding not just operational KPIs but enterprise value itself.

Here's the thing!

AI will shift decision-making power. That shift creates value. It also creates vulnerabilities. If you are a business leader, your job is not to chase every model. It is to choose the right problems, measure the impact, and make integration audit-ready.

Why now? Market, regulators, systemic risk

Source: Cambridge Judge Business School

One of the greatest challenges in GCC markets is the diversity of regulatory environments and risk profiles. For example, what constitutes “acceptable model risk” or “data privacy” in Qatar may differ from what is stipulated in Oman or Bahrain.

AI in risk management offers significant upside, whether for credit risk, market risk, operational risk, fraud, anti-money laundering (AML), or cybersecurity. It can process massive volumes of transaction data, customer behavior, and external signals (such as commodity price shifts, oil demand, or geopolitical events) to spot emerging threats. However, deploying AI effectively also means three things:

- managing model bias, especially when models are trained on non-local data;
- ensuring explainability, so that GCC regulators can audit and trust decisions;
- building institutional capacity: skilled teams, AI-risk frameworks, internal audit, and alignment with Sharia compliance where applicable.

Adoption is accelerating. Banks and insurers are moving from pilots to scaled services. Generative AI is making new workflows possible in compliance, credit, and fraud detection.

“Gen AI has the potential to revolutionize the way that banks manage risks over the next three to five years.” - McKinsey

Much of this progress is driven by the growing adoption of AI-powered risk management.

At the same time, supervisors are sharpening their view. They worry about model opacity and vendor concentration. Explainability is now at the top of the agenda for enterprises and SMBs alike. Authorities want auditable trails and demonstrable controls, not just better metrics.

Take, for instance, a GCC bank that uses AI to monitor suspicious transactions across its network. With machine learning, it can detect anomalous patterns far faster than traditional rule-based systems. But without localized thresholds, the model might generate too many false positives (over-alerting). Worse, it might miss fraud tactics that are culturally or regionally specific.

Credit scoring in frontier or retail segments is another example. Many customers in GCC countries are underbanked or have limited credit history. Here, AI models that incorporate alternative data (mobile payments, utility bills, behavioral data) can help bridge the gap. That only works if data quality, privacy, and fairness concerns are properly addressed.
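
Here is one illustration of what localized thresholds can mean for the AML example above. This minimal Python sketch uses a simple statistical anomaly score as a stand-in for a trained machine-learning model: a robust z-score built from the median and the median absolute deviation (MAD). The market codes and values in `THRESHOLDS`, and the sample history, are hypothetical placeholders that a bank would calibrate on its own local data.

```python
import math
from statistics import median


def robust_z(value: float, history: list[float]) -> float:
    """Robust z-score of `value` against a market's history, using median and MAD."""
    med = median(history)
    mad = median([abs(x - med) for x in history])
    if mad == 0:
        # Degenerate history (all values equal): anything different is maximally unusual.
        return 0.0 if value == med else math.copysign(math.inf, value - med)
    # 1.4826 rescales MAD so it is comparable to a standard deviation for normal data.
    return (value - med) / (1.4826 * mad)


# Hypothetical per-market thresholds; in practice each would be calibrated on local data.
THRESHOLDS = {"AE": 3.5, "SA": 4.0, "OM": 3.0}
DEFAULT_THRESHOLD = 3.5


def should_alert(amount: float, market: str, history_by_market: dict[str, list[float]]) -> bool:
    """Flag a transaction that is unusually large relative to its own market's baseline."""
    threshold = THRESHOLDS.get(market, DEFAULT_THRESHOLD)
    return robust_z(amount, history_by_market[market]) > threshold


history = {"AE": [120, 95, 130, 110, 105, 98, 125]}  # hypothetical recent amounts
print(should_alert(400, "AE", history))  # True
print(should_alert(140, "AE", history))  # False
```

The same transaction amount can be routine in one market and anomalous in another. Keeping both the baseline and the threshold per market is what lets the scoring adapt to local behavior instead of applying one global cut-off.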

In the UAE, which often leads the GCC in tech adoption, almost half (49%) of finance teams already use AI in operational roles, including risk management, predictive modeling, and fraud detection.

What this really means is simple: if your AI programs lack governance, they will become a regulatory and operational liability rather than an asset.

Build for control first. Capture value second.

Before turning to solutions, it’s essential to recognize the reality that senior technology and operations leaders face every day. Their decisions directly shape business performance, and the consequences of those decisions are tangible and measurable. With that context in mind, let’s look at the seven hard outcomes that today’s business leaders must tackle head-on.

Seven hard outcomes business leaders face

Despite years of investment, risk management in BFSI still struggles with operational blind spots. CTOs and COOs face recurring challenges that drain resources, weaken resilience, and expose institutions to regulatory heat. Here are seven pain points demanding urgent transformation.

1. False positives in AML and fraud systems

High false-positive rates waste analyst time and bury genuine cases in noise, which hinders effective investigation. They also create friction for customers, delay onboarding, and increase costs.

AI in risk management for finance must focus on smarter transaction scoring to reduce manual reviews while preserving security and trust.
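
One concrete form of smarter scoring is to tune the alert threshold on historically investigated cases instead of fixing it by rule. The Python sketch below assumes that a model already assigns each transaction a risk score. It also assumes that past investigations supply labels (1 = confirmed suspicious). `pick_threshold` is a hypothetical helper, and the scores and labels are invented. It returns the strictest threshold that still catches a target share of known suspicious cases. Because alert volume only grows as the threshold drops, this is also the threshold that generates the fewest alerts.

```python
def pick_threshold(scores: list[float], labels: list[int], min_recall: float) -> tuple[float, int]:
    """Highest alert threshold that still catches at least `min_recall` of known suspicious cases.

    Returns the threshold and how many alerts it would generate on this sample.
    """
    if not 0 < min_recall <= 1:
        raise ValueError("min_recall must be in (0, 1]")
    positives = sum(labels)
    if positives == 0:
        raise ValueError("need at least one labeled suspicious case")
    # Walk candidate thresholds from strictest to loosest; the first one that meets
    # the recall target is the one that generates the fewest alerts.
    for t in sorted(set(scores), reverse=True):
        caught = sum(1 for s, y in zip(scores, labels) if s >= t and y == 1)
        if caught / positives >= min_recall:
            return t, sum(1 for s in scores if s >= t)
    raise AssertionError("unreachable: the lowest threshold flags every case")


# Hypothetical model scores and investigation outcomes for ten transactions.
scores = [0.95, 0.91, 0.80, 0.72, 0.60, 0.55, 0.40, 0.30, 0.20, 0.10]
labels = [1, 0, 1, 0, 1, 0, 0, 0, 0, 0]
print(pick_threshold(scores, labels, 1.0))  # (0.6, 5)
```

On this toy sample, catching every known case requires reviewing 5 transactions instead of all 10. On real data, the recall target would be agreed with compliance teams and validated on a held-out period.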

2. Opaque credit models causing provisioning shocks

Legacy credit risk models often behave like black boxes. Sudden provisioning changes erode confidence and impact balance sheets.

AI-powered risk management built with explainability in mind can deliver transparency and predictive accuracy. That lets financial leaders prepare proactively instead of reacting after the damage is done.

“Explainability poses significant challenges and issues for financial institutions and regulators.” - Bank for International Settlements
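
A common way to make a credit model explainable is to report, for each decision, how much every input pushed the score up or down. The Python sketch below does this for a logistic model with hypothetical coefficients and a hypothetical baseline applicant. The largest contributions become human-readable reason codes. This is an illustration under those assumptions, not a production scorecard. Non-linear models would typically need attribution methods such as SHAP values to produce comparable explanations.

```python
import math

# Hypothetical coefficients of an illustrative logistic model for probability of default (PD).
INTERCEPT = -3.0
COEFFICIENTS = {
    "debt_to_income": 2.5,       # higher debt burden raises risk
    "missed_payments_12m": 0.8,  # missed payments in the last 12 months
    "years_with_bank": -0.15,    # a longer relationship lowers risk
}
# Hypothetical portfolio averages that serve as the reference applicant for explanations.
BASELINE = {"debt_to_income": 0.35, "missed_payments_12m": 0.5, "years_with_bank": 4.0}


def explain(applicant: dict[str, float], top_n: int = 2) -> tuple[float, dict[str, float], list[str]]:
    """Return the PD, each feature's log-odds contribution versus the baseline, and top reason codes."""
    log_odds = INTERCEPT + sum(COEFFICIENTS[f] * applicant[f] for f in COEFFICIENTS)
    prob_default = 1 / (1 + math.exp(-log_odds))
    contributions = {f: COEFFICIENTS[f] * (applicant[f] - BASELINE[f]) for f in COEFFICIENTS}
    # The features that push risk up the most become the reason codes.
    reasons = sorted(contributions, key=contributions.get, reverse=True)[:top_n]
    return prob_default, contributions, reasons


pd_estimate, contribs, reasons = explain(
    {"debt_to_income": 0.6, "missed_payments_12m": 2, "years_with_bank": 1}
)
print(f"PD = {pd_estimate:.3f}, reasons = {reasons}")
# PD = 0.488, reasons = ['missed_payments_12m', 'debt_to_income']
```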

3. Audit failures and regulatory remediation

Every failed audit is costly, not just financially but also reputationally. Regulators expect traceable models and accountable governance.

Embracing explainable AI means fewer surprises and stronger alignment with evolving banking risk management standards. It turns compliance into a strategic advantage instead of a recurring pain.
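
Traceability starts with recording every automated decision in a form an auditor can replay. This standard-library Python sketch shows one possible shape for such a record. The field names, the model identifier `retail-pd`, and the JSON Lines log format are illustrative assumptions. Hashing a canonical form of the inputs lets an auditor confirm later that the stored inputs match what the model actually scored.

```python
import hashlib
import json
from datetime import datetime, timezone


def input_fingerprint(features: dict) -> str:
    """SHA-256 of the canonical JSON form of the model inputs (key order does not matter)."""
    canonical = json.dumps(features, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def audit_record(model_id: str, model_version: str, features: dict,
                 decision: str, reasons: list[str]) -> dict:
    """Bundle everything needed to explain and reproduce one automated decision."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_id": model_id,
        "model_version": model_version,
        "input_sha256": input_fingerprint(features),
        "decision": decision,
        "reasons": reasons,
    }


def append_record(path: str, record: dict) -> None:
    """Append one record to a JSON Lines log: one decision per line, never rewritten."""
    with open(path, "a", encoding="utf-8") as fh:
        fh.write(json.dumps(record, sort_keys=True) + "\n")


record = audit_record("retail-pd", "1.4.0", {"debt_to_income": 0.6, "missed_payments_12m": 2},
                      decision="refer", reasons=["missed_payments_12m"])
print(json.dumps(record, indent=2))
```

In a real deployment the log would live in write-once storage with access controls. The sketch only shows which facts each entry should carry.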

4. Vendor concentration and third-party reliance

Over-reliance on a single vendor, platform, or model creates systemic vulnerability. A single outage or compliance breach can have a ripple effect across the entire enterprise.

Diversified, modular adoption of AI in BFSI mitigates risk, ensuring resilience and continuity under regulatory and operational stress.

“Specific vulnerabilities may arise from the complexity and opacity of AI models, inadequate risk management frameworks to account for AI risks and interconnections that emerge as many market participants rely on the same data and models” - U.S. Treasury Secretary Janet Yellen
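
One way to soften single-vendor dependence is to put every scoring provider behind the same interface and fall back in priority order when one fails. The Python sketch below is hypothetical: `vendor_a` and `in_house` are stand-ins for a third-party service and an internally hosted model, not real SDKs. Returning the name of the provider that answered keeps the fallback visible in audit logs.

```python
from typing import Callable, Sequence

Scorer = Callable[[dict], float]


class ScoringUnavailable(Exception):
    """Raised when every configured scoring provider has failed."""


def score_with_fallback(features: dict, providers: Sequence[tuple[str, Scorer]]) -> tuple[str, float]:
    """Try each provider in priority order; return the name of the one that answered and its score."""
    errors = {}
    for name, scorer in providers:
        try:
            return name, scorer(features)
        except Exception as exc:  # e.g. timeout, outage, or quota error from a provider
            errors[name] = repr(exc)
    raise ScoringUnavailable(errors)


def vendor_a(features: dict) -> float:
    raise TimeoutError("vendor A unreachable")  # simulated outage


def in_house(features: dict) -> float:
    return 0.12  # simulated score from an internally hosted model


result = score_with_fallback({"amount": 950.0}, [("vendor_a", vendor_a), ("in_house", in_house)])
print(result)  # ('in_house', 0.12)
```

A fallback model still needs its own validation and approval. The point of the pattern is that an outage at one vendor degrades service rather than halting it.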

5. Model drift during macro shocks

Pandemics, inflation spikes, or geopolitical events expose the fragility of models. Traditional systems often fail under pressure, delivering inaccurate risk signals when they are needed most.

AI with adaptive learning and drift detection helps keep models calibrated, protecting capital and the integrity of decision-making during periods of uncertainty.
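
Drift detection can start with something as simple as the Population Stability Index (PSI). PSI compares the current distribution of a model input or score against the distribution the model was validated on. This Python sketch uses equal-width bins over the baseline range; decile bins are an equally common choice. The sample data are synthetic.

```python
import math


def psi(expected: list[float], actual: list[float], bins: int = 10) -> float:
    """Population Stability Index of `actual` against a baseline sample `expected`.

    Uses equal-width bins over the baseline range; values outside it fall into the edge bins.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins if hi > lo else 1.0

    def shares(sample: list[float]) -> list[float]:
        counts = [0] * bins
        for x in sample:
            idx = min(max(int((x - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # Floor empty bins at a tiny share so the logarithm stays finite.
        return [max(c / len(sample), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


baseline = [i / 100 for i in range(100)]          # synthetic validation-time scores
recent = [min(x + 0.3, 0.99) for x in baseline]   # synthetic scores after a shock
print(round(psi(baseline, baseline), 4))  # 0.0
print(psi(baseline, recent) > 0.25)       # True
```

A commonly cited rule of thumb treats PSI below 0.1 as stable, 0.1 to 0.25 as worth investigating, and above 0.25 as a significant shift. Each institution would still set its own triggers.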

6. Broken data lineage and poor provenance

Without strong lineage and provenance, root-cause analysis becomes guesswork. Boards demand answers, regulators demand evidence, and customers demand trust.

Building AI capabilities in BFSI around traceability creates reliable pipelines that withstand scrutiny, improve governance, and accelerate investigations when anomalies appear.
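
Lineage becomes useful when it can quickly answer “where did this number come from?” The Python sketch below uses hypothetical dataset names. It records each artifact together with the transformation that produced it and its direct inputs. It then walks the graph upstream to list the original sources behind an AML score. A real deployment would more likely rely on a metadata or lineage platform; this only illustrates the underlying idea.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Artifact:
    """One dataset or model output, with the step that produced it and its direct inputs."""
    name: str
    transformation: str
    parents: tuple[str, ...] = ()


def trace_sources(name: str, registry: dict[str, Artifact]) -> list[str]:
    """Walk the lineage graph upstream from `name` and return its root source datasets."""
    roots, seen, stack = set(), set(), [name]
    while stack:
        current = stack.pop()
        if current in seen:
            continue
        seen.add(current)
        node = registry[current]
        if not node.parents:
            roots.add(current)  # no parents: an original source-system extract
        stack.extend(node.parents)
    return sorted(roots)


registry = {a.name: a for a in [
    Artifact("core_txns", "extract from core banking"),
    Artifact("fx_rates", "daily FX feed"),
    Artifact("kyc_profiles", "extract from KYC system"),
    Artifact("txn_enriched", "join + currency normalisation", ("core_txns", "fx_rates")),
    Artifact("aml_features", "feature engineering", ("txn_enriched", "kyc_profiles")),
    Artifact("aml_scores", "scoring with model 1.4.0", ("aml_features",)),
]}
print(trace_sources("aml_scores", registry))  # ['core_txns', 'fx_rates', 'kyc_profiles']
```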

7. Slow redeployment and high MTTR

When a model fails, every hour of downtime magnifies risk exposure. Long redeployment cycles slow recovery and drive up the mean time to recovery (MTTR).

AI-powered risk management requires automated pipelines for rapid redeployment, ensuring operations recover in days, not weeks.
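
Fast recovery depends on two things: reactivating a known-good model without a full rebuild, and measuring how long recovery actually takes. The Python sketch below is a minimal in-memory stand-in for a model registry plus an MTTR calculation. The version labels and incident timestamps are invented for illustration. A production pipeline would add validation gates, approvals, and persistent storage.

```python
from datetime import datetime, timedelta
from typing import Optional


class ModelRegistry:
    """Minimal in-memory registry: every approved version is kept so rollback is a single call."""

    def __init__(self) -> None:
        self.versions: list[str] = []
        self.active: Optional[str] = None

    def promote(self, version: str) -> None:
        """Register a newly validated version and make it the active one."""
        self.versions.append(version)
        self.active = version

    def rollback(self) -> str:
        """Reactivate the version approved immediately before the active one."""
        if self.active is None:
            raise RuntimeError("no active model")
        position = self.versions.index(self.active)
        if position == 0:
            raise RuntimeError("no earlier version to roll back to")
        self.active = self.versions[position - 1]
        return self.active


def mean_time_to_recovery(incidents: list[tuple[datetime, datetime]]) -> timedelta:
    """Average of (recovered - detected) across incidents."""
    total = sum((recovered - detected for detected, recovered in incidents), timedelta())
    return total / len(incidents)


registry = ModelRegistry()
registry.promote("1.3.0")
registry.promote("1.4.0")
print(registry.rollback())  # 1.3.0

incidents = [
    (datetime(2025, 1, 6, 9, 0), datetime(2025, 1, 6, 13, 0)),  # 4 hours
    (datetime(2025, 2, 3, 8, 0), datetime(2025, 2, 3, 10, 0)),  # 2 hours
]
print(mean_time_to_recovery(incidents))  # 3:00:00
```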

This concludes Part 1 of our series on AI’s role in reshaping risk management for the BFSI sector. In Part 2, we will move from strategic context to practical execution. We will showcase real-world AI use cases, the core capabilities risk leaders must build, and the safeguards needed for responsible adoption. We will also outline how NETSOL enables financial institutions to harness AI with confidence and compliance.

Related blogs

Blog

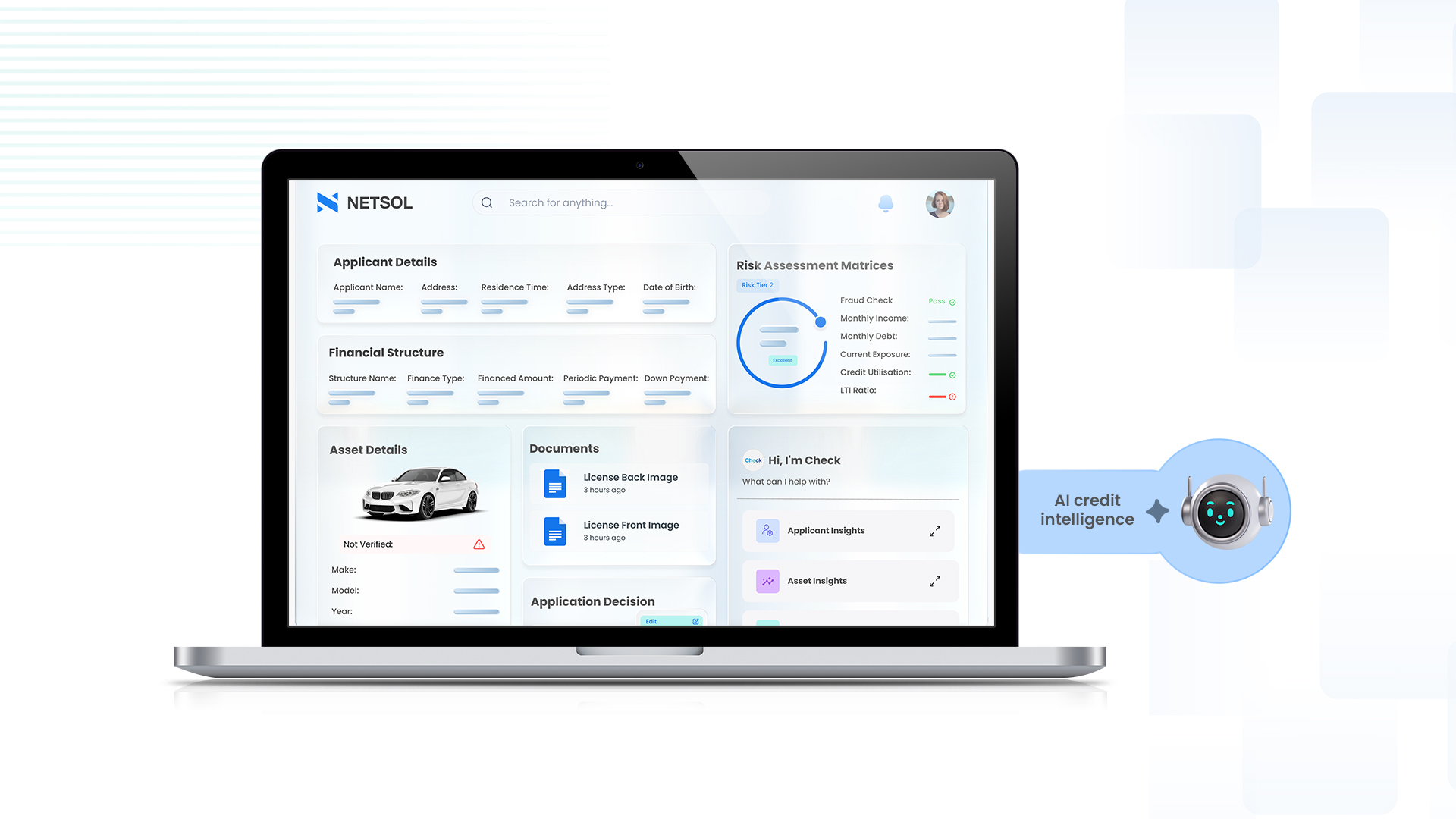

From credit checks to credit intelligence: How AI is redefining underwriting for captives

Blog

Shared financing models for high-value assets unlocking Indonesia’s next wave of growth

Blog