Why human-in-the-loop is critical to successful AI strategies in asset finance

By Kamran Khalid, Chief Product & Delivery Officer, on May 30, 2025

Human-in-the-Loop (HITL) ensures responsible, transparent AI in asset finance by combining automation with expert oversight. It helps manage risk, meet regulatory demands, and improve decision quality across complex, high-value lending.

The rapid rise of AI in asset finance has revolutionized processes such as credit decision-making, risk assessment, and customer servicing. McKinsey's 2024 survey, "The State of AI in early 2024," indicates that 65% of organizations are regularly using generative AI in at least one business function, up from 33% the previous year.

This adoption spans various industries, including financial services, where AI is increasingly embedded to enhance operations and customer experiences.

Yet, despite these technological advances, asset finance remains a complex, highly regulated domain involving significant financial risk, requiring continuous human expertise to oversee and validate AI-driven outcomes.

Notable failures illustrate this necessity. In February 2023, the UK's Financial Conduct Authority (FCA) reprimanded Amigo Loans for inadequate affordability checks, which were influenced by a flawed algorithm. This oversight led to vulnerable customers receiving unaffordable loans. Although the FCA considered imposing a $98 million fine, it refrained due to Amigo's financial difficulties and a court-mandated customer redress program. This case underscores the risks of insufficiently supervised AI systems in lending and the importance of human oversight.

Human-in-the-loop (HITL) is thus a practical solution that bridges AI automation and human judgment, ensuring responsible, transparent, and adaptable lending and servicing decisions in asset finance.

Current trends in AI adoption in asset finance

- Increasing use cases

AI now powers automated credit scoring, residual value (RV) forecasting for leased assets, fraud detection, portfolio risk analytics, and dynamic pricing.

A late-2023 Ernst & Young LLP (EY US) survey of financial services executives found that 99% of leaders have deployed AI in some fashion, and every single respondent is either using or planning to use generative AI in their organization.

The explosion of generative AI in the past year has only accelerated this trend; one-third of companies are already using generative AI regularly in at least one business function, and 40% plan to increase overall AI investments as a result.

PwC's 2024 Responsible AI Survey found that 58% of financial services firms are piloting or deploying generative AI, with 52% using AI specifically in financial reporting.

- Hybrid human-AI models

Full automation is rare; firms prefer hybrid workflows where AI handles routine data processing, but humans oversee complex decisions, especially for high-value or non-standard asset classes.

Among 64 organizations surveyed by CB Insights in December 2024, two-thirds indicated they are using or will be using AI agents in customer support in the next 12 months.

- Regional variations

North America and Europe lead adoption, driven by fintech ecosystems and regulatory clarity. APAC is accelerating adoption, especially in Singapore, Hong Kong, and Australia, where fintech hubs drive innovation and regulatory sandboxes facilitate AI experimentation.

Limitations of pure AI-driven models in asset finance

1. Regulatory compliance

- Regulatory frameworks globally emphasize human oversight for explainability and accountability. The UK FCA’s guidance on AI mandates explainable AI models with explicit human review for high-risk decisions. The EU AI Act requires human-in-the-loop mechanisms for credit underwriting systems classified as “high risk.”

- In the US, the Consumer Financial Protection Bureau (CFPB) demands transparent credit decision explanations, supporting HITL to ensure compliance and mitigate bias.

- In Asia, regulators like the Monetary Authority of Singapore (MAS) promote human intervention to prevent algorithmic discrimination and protect consumer rights.

2. Complex credit decisions

AI struggles with niche financing cases such as aviation equipment leasing, construction machinery financing, or medical device leasing, where market conditions, asset lifecycle nuances, and regulatory constraints require human expertise. For instance, aviation asset finance demands deep knowledge of aircraft maintenance cycles and residual value fluctuations that AI models may not fully capture.

3. GenAI complexity

Generative AI models, with hundreds of billions of parameters, present explainability challenges. Emerging reasoning models attempt to trace AI's "chain of thought," but in regulated sectors like finance, transparent and auditable human oversight remains indispensable.

Why AI models are not fully mature or bias-free

Data limitations: AI relies on historical datasets, which can be outdated or incomplete. For instance, the Financial Stability Board’s 2024 review of AI in finance highlights “model risk, data quality and governance” as a core vulnerability, warning that the data-hungry nature of modern AI means the supply of high-quality, real-world data could be exhausted as early as 2026, forcing models to depend on opaque or stale inputs and magnifying error rates.

In IBM’s global AI in Action 2024 study, 42% of the 2,000 organizations surveyed reported “insufficient proprietary data” as a top barrier to customizing generative-AI models, and 45% flagged concerns about data accuracy or bias.

Bias in training data: Legacy biases in lending (e.g., socio-demographic disparities) risk being embedded and perpetuated by AI unless human experts actively monitor and correct for these biases.

Algorithmic opacity: Deep learning models function as “black boxes,” limiting interpretability without human explanation layers.

Rapid market changes: Sudden policy shifts (e.g., Brexit, new EV subsidies) require human judgment for timely reassessment beyond AI’s historical data patterns.

High-stakes decisions: In asset finance, errors can cause multi-million-dollar losses, mandating rigorous human checks to mitigate risks.

Human oversight risks: While human review is crucial, unconscious biases and errors in data selection or oversight can also affect outcomes, emphasizing the need for structured HITL frameworks and ongoing training.

The strategic value of human expertise

1. Contextual risk management

Humans excel at interpreting qualitative factors that AI misses, such as geopolitical events, regulatory changes, or shifts in customer sentiment.

Example: A March 2025 Salata Institute brief shows that scrapping federal EV tax credits would knock six percentage points off the EV share of 2030 new-car sales, and a combined rollback of credits and charger subsidies would slash it by 12.7 percentage points. Human portfolio teams have to re-price residuals the moment such proposals surface; an AI trained on yesterday’s data won’t react until after losses start appearing.

2. Adaptive decision-making in niche asset classes

- Specialized knowledge is required in sectors such as solar equipment finance, where subsidy changes dramatically affect creditworthiness. Human credit teams dynamically adjust decisions based on evolving policy landscapes.

- HITL ensures AI recommendations are reviewed and contextualized by experts, preserving compliance and optimizing financial outcomes.

3. Handling exceptions and complex servicing

- AI flags potential restructuring or defaults, but humans evaluate final actions, especially in loan servicing exceptions.

- Dynamic RV estimation for emerging asset classes like EVs and heavy equipment requires continuous human calibration to reflect evolving market conditions.

Embedding HITL into future-proof product architectures

1. API-first modular design

AI scoring engines, override modules, and exception handling operate as independent microservices, facilitating flexible integration at any credit or servicing stage.

2. MACH-compliant flexibility

Platforms adhering to Microservices, API-first, Cloud-native, Headless design enable selective HITL deployment by asset class or region without costly rewrites.

3. Out-of-the-box HITL enablers

Platforms should include:

- Pre-configured decision thresholds for human escalation

- Built-in, immutable audit trails

- Role-based override capabilities

- Configurable dashboards for efficient exception review
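To make two of these enablers concrete, here is a minimal Python sketch of a role-gated override that writes to a hash-chained audit log. It is illustrative only: the role names, the `AuditTrail` class, and the `override_decision` helper are assumptions made for this example, not part of any specific platform. A hash chain makes tampering detectable rather than impossible, so a production system would typically pair it with write-once storage.

```python
import hashlib
import json
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical roles permitted to override an AI decision (assumption).
OVERRIDE_ROLES = {"senior_underwriter", "credit_manager"}
GENESIS_HASH = "0" * 64


@dataclass
class AuditTrail:
    """Append-only log in which each entry stores the hash of the previous one."""
    entries: list = field(default_factory=list)

    def append(self, event: dict) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS_HASH
        payload = json.dumps(event, sort_keys=True)
        entry_hash = hashlib.sha256((prev_hash + payload).encode()).hexdigest()
        entry = {"event": event, "prev_hash": prev_hash, "hash": entry_hash}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute the chain; any edited or reordered entry breaks it."""
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            payload = json.dumps(entry["event"], sort_keys=True)
            expected = hashlib.sha256((prev_hash + payload).encode()).hexdigest()
            if entry["prev_hash"] != prev_hash or entry["hash"] != expected:
                return False
            prev_hash = entry["hash"]
        return True


def override_decision(trail, user, role, application_id,
                      ai_decision, new_decision, reason):
    """Record a human override, but only for roles allowed to make one."""
    if role not in OVERRIDE_ROLES:
        raise PermissionError(f"role '{role}' may not override AI decisions")
    return trail.append({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "application_id": application_id,
        "ai_decision": ai_decision,
        "new_decision": new_decision,
        "reason": reason,
    })
```

Calling `trail.verify()` during an audit confirms that no recorded override has been altered since it was written.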

4. Interoperability with workflow tools

Smooth interoperability with platforms like ServiceNow, Jira, and Slack facilitates human review triggers and collaboration.

Implementing human-in-the-loop successfully

Human-centric design: Systems must be transparent, auditable, and clearly communicate to users when AI is involved, with easy access to human review on demand.

Clear decision boundaries: Define explicit rules for when AI decisions require human escalation, e.g., commercial leases above $675,000 or loans involving non-standard asset classes.
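As a rough illustration, escalation rules of this kind can be written as plain, reviewable code. The sketch below uses the $675,000 lease threshold from the example above; the `Application` fields, the product labels, and the list of "standard" asset classes are hypothetical and would be set by each lender's credit policy.

```python
from dataclasses import dataclass

LEASE_ESCALATION_THRESHOLD = 675_000  # USD, taken from the example in the text
# Hypothetical list of asset classes treated as standard (assumption).
STANDARD_ASSET_CLASSES = {"passenger_vehicle", "light_commercial_vehicle"}


@dataclass(frozen=True)
class Application:
    application_id: str
    product: str       # assumed labels: "commercial_lease" or "loan"
    amount: float      # financed amount in USD
    asset_class: str


def escalation_reasons(app: Application) -> list[str]:
    """Return every rule that sends this application to a human reviewer."""
    reasons = []
    if app.product == "commercial_lease" and app.amount > LEASE_ESCALATION_THRESHOLD:
        reasons.append("commercial lease above threshold")
    if app.product == "loan" and app.asset_class not in STANDARD_ASSET_CLASSES:
        reasons.append("loan on non-standard asset class")
    return reasons


def needs_human_review(app: Application) -> bool:
    return bool(escalation_reasons(app))
```

Returning the list of triggered rules, rather than a bare yes or no, gives the reviewer and the audit record the reason for each escalation.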

Regular feedback loops: Continuous human review to refine AI models is critical. For instance, Lloyds publicly states that all credit-scoring models must pass human ethics and bias checks before deployment and are subject to ongoing monitoring by its AI Centre of Excellence.

Exception management frameworks: Use specialized dashboards for humans to efficiently review and override AI outputs, as practiced by firms like VW Financial Services.

Training and change management: Equip finance teams with skills to critically interpret AI insights, enabling informed decisions and reducing reliance on automation alone.

Proven outcomes of integrating human-in-the-loop

Reduced risk exposure: Combined human-AI underwriting has demonstrated measurable reductions in default rates.

Enhanced customer trust: Transparent, explainable decisions reduce disputes, improve customer satisfaction, and boost retention.

Improved AI accuracy: Iterative human feedback drives continuous refinement of AI models, as seen in improved residual value forecasting after underwriter adjustments.

Operational efficiency: Broker portals with HITL workflows facilitate manual overrides and compliance checks, speeding decision turnaround without sacrificing accuracy.

Managing HITL challenges specific to asset finance

Scaling vs. quality: Deploy HITL selectively on high-risk loans to balance efficiency with oversight quality.

Trust gap: Address skepticism among finance teams via transparency and clear governance mechanisms.

Bias mitigation: Monitor human overrides for bias risk, ensuring fairness complements AI's data-driven insights.

Performance metrics: Track KPIs like default rates, cycle times, and regulatory incidents to measure HITL effectiveness.
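A minimal sketch of how such KPIs might be computed from decision records follows. The `DecisionRecord` fields and the choice of metrics (default rate, median cycle time, review rate, override rate) are assumptions for illustration; regulatory incidents would normally come from a separate compliance log and are left out here.

```python
from dataclasses import dataclass
from statistics import median


@dataclass(frozen=True)
class DecisionRecord:
    """One completed credit decision (hypothetical schema)."""
    reviewed_by_human: bool
    overridden: bool          # human changed the AI recommendation
    defaulted: bool
    cycle_time_hours: float   # application received to decision issued


def hitl_kpis(records: list[DecisionRecord]) -> dict:
    """Summarize HITL effectiveness over a batch of decisions."""
    if not records:
        raise ValueError("at least one decision record is required")
    total = len(records)
    reviewed = [r for r in records if r.reviewed_by_human]
    override_rate = (
        sum(r.overridden for r in reviewed) / len(reviewed) if reviewed else 0.0
    )
    return {
        "default_rate": sum(r.defaulted for r in records) / total,
        "median_cycle_time_hours": median(r.cycle_time_hours for r in records),
        "review_rate": len(reviewed) / total,
        "override_rate": override_rate,
    }
```

Tracking the override rate alongside the default rate helps show whether human review is changing outcomes or merely adding cycle time.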

Implications for human capital: Fostering collaborative human-AI approaches

Human-AI collaboration: Asset managers should leverage GenAI for heavy data processing while applying domain expertise for interpretation, ethical oversight, and application of insights.

Upskilling & training: As GenAI platforms like ChatGPT exceed 200 million weekly active users, organizations must provide targeted training in AI platforms, regulatory compliance, and effective prompt engineering to maximize ROI.

Buyer lens: Key concerns of decision-makers and how HITL solves them

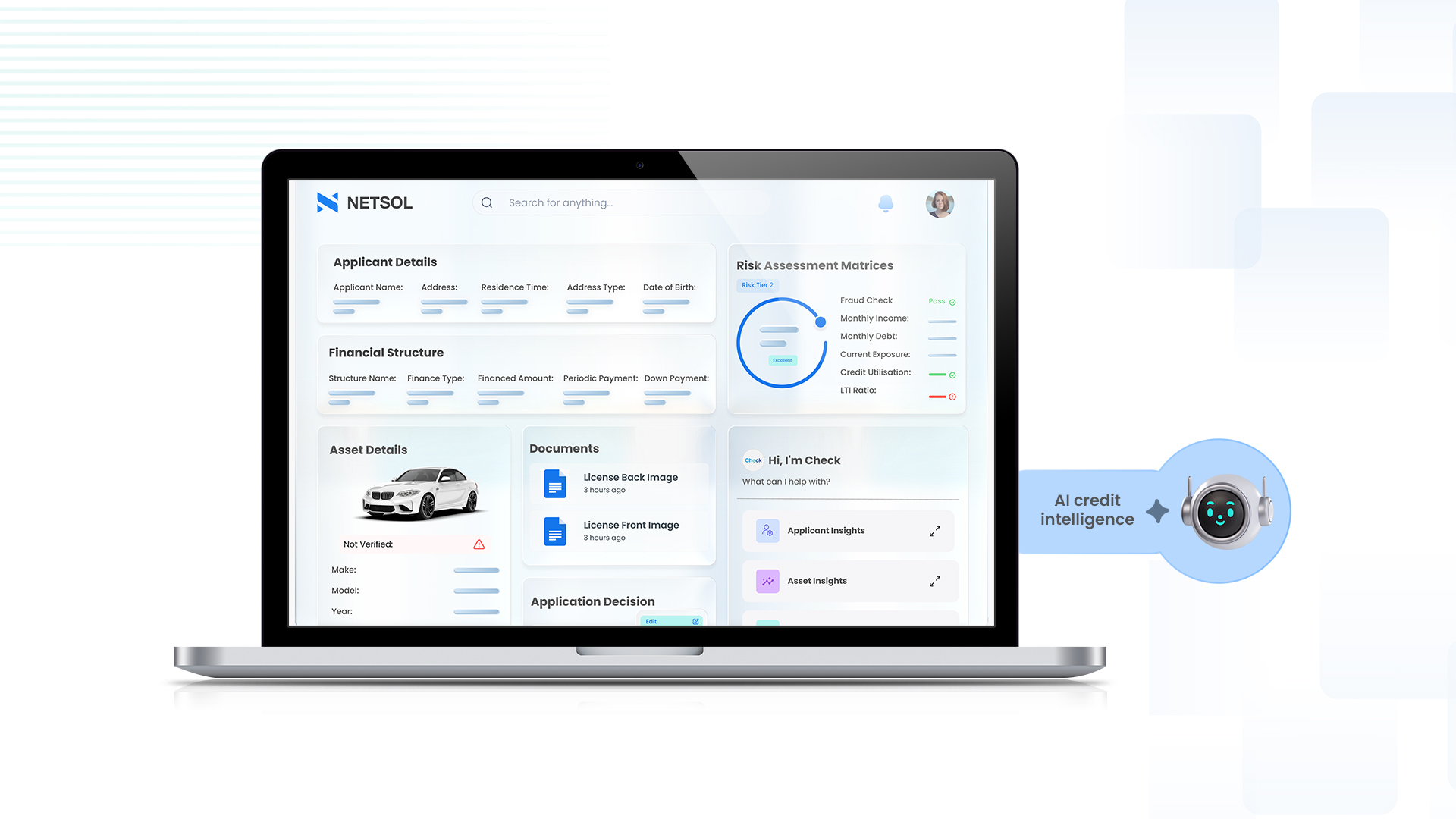

Human-in-the-loop – The NETSOL way

“The future lies not in replacing humans with AI, but in building systems where AI augments domain experts through composable, explainable, and adaptive intelligence, ensuring speed, trust, and control.”

Conclusion

Purely automated AI, while efficient, remains insufficient without expert human judgment in asset finance. Strategic investment in Human-in-the-Loop frameworks ensures the balance of cutting-edge technology with the critical oversight of human expertise, preserving compliance, fairness, and financial soundness.

Enduring human capabilities such as curiosity, empathy, critical thinking, and collaboration remain vital. Finance professionals must develop fluency in generative AI, including:

- Effective prompt engineering for precise outcomes

- Detecting and mitigating bias

- Validating AI outputs and ongoing model monitoring

These competencies will empower firms to harness AI’s full potential responsibly and sustainably.

NETSOL’s Transcend AI Labs is built around the very principles outlined above. It delivers API-first AI modules that:

- Route low-confidence predictions to human reviewers via configurable thresholds, ensuring critical decisions always get expert oversight.

- Provide role-based override dashboards and immutable audit trails, satisfying regulators from the FCA to MAS and the CFPB.

- Capture every human correction as training data so models continuously improve while exception queues shrink.

This human-in-the-loop design is already helping asset-finance lenders across North America, Europe, and APAC cut approval times, reduce default risk, and stay audit-ready.
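For readers who want to picture the general pattern of confidence-based routing with feedback capture, here is a generic Python sketch. It is not NETSOL's implementation or API: the `HitlRouter` class, its method names, and the 0.85 default threshold are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class HitlRouter:
    """Send low-confidence predictions to humans and keep their corrections."""
    confidence_threshold: float = 0.85   # hypothetical default, configurable
    review_queue: list = field(default_factory=list)
    corrections: list = field(default_factory=list)

    def route(self, application_id, features, prediction, confidence):
        """Return "auto" or "human_review" for a single model prediction."""
        if confidence < self.confidence_threshold:
            self.review_queue.append({
                "application_id": application_id,
                "features": features,
                "prediction": prediction,
                "confidence": confidence,
            })
            return "human_review"
        return "auto"

    def record_review(self, application_id, human_decision):
        """Close a queued item; store it as a training example if corrected."""
        for index, item in enumerate(self.review_queue):
            if item["application_id"] == application_id:
                reviewed = self.review_queue.pop(index)
                if human_decision != reviewed["prediction"]:
                    self.corrections.append({
                        "features": reviewed["features"],
                        "label": human_decision,
                    })
                return reviewed
        raise KeyError(f"{application_id} is not awaiting review")
```

In this sketch only disagreements are stored as new labels. Whether confirmed predictions should also be kept as training data is a design choice that depends on the retraining strategy.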

Book a 30-minute strategy call with our HITL specialists to discover how fast you can move from proof-of-concept to production without sacrificing compliance, transparency, or control.

Harness AI speed and human judgement because, in asset finance, that’s the only route to growth you can trust.
